Research Interests

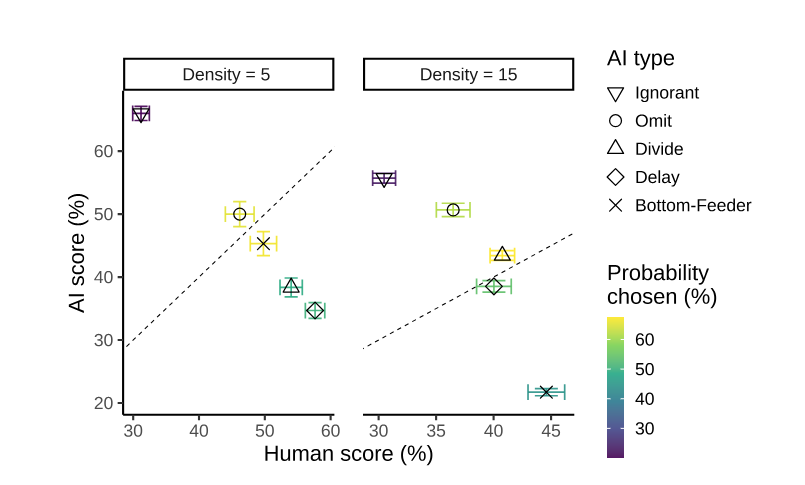

At heart, I study human decision-making. I have a particular interest in using cognitive models to specify the causal processes that give rise to people’s choices. Human-AI collaboration has become an extremely convenient outlet for me in this regard. For one, the real-world applications of contemporary AI technologies offer plenty of inspiration for my research. For example, I’m interested in how people trade off the costs of outsourcing effortful cognitive labor to a computational agent, and how far you can push the attributes of this agent before eliciting a behavioral shift in the participant. Moreover, because cognitive models are mathematical in nature, casting my research through the lens of human-AI collaboration has immediate practical implications: solutions to “misaligned” behaviors are more readily generated from formal explanations of human behavior. In my research, the “AI” primarily serves as a theoretical tool for asking questions like: «How would people behave if they had access to an agent with properties X and Y?» That being said, I have a definite interest in demonstrating the applied value of my work, meaning that some of my projects go the extra mile of combining psychological models with algorithms from AI research.

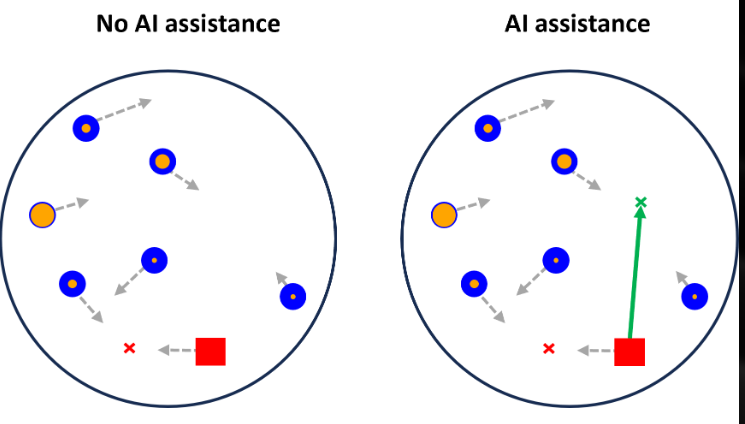

The project that currently enjoys most of my attention intentionally pairs participants with an incredibly annoying-to-use “AI” assistant to understand how the timing of AI-induced interruptions influences the participant’s motivation to permanently disable the agent. With a causal model of people’s annoyance decisions integrated, our simulations show that it should be possible to estimate policies for the AI assistant that avoid interrupting the participant at times when advice is unlikely to be appreciated. Such policies could lead to much more sustainable and productive collaboration.