In Progress

Do your studies rely on groups of people? Recruiting participants for group sessions can be arduous, often costing a lot of time, effort, and resources. In this work, we show that no existing tool can appropriately allocate a sample of interested participants to a set of group sessions. We then mathematically derive metrics that quantify the effectiveness of any tool attempting to solve this problem. Finally, we develop an algorithm that outperforms existing utilities on this task, as demonstrated through simulations and a large-scale pre-registered user study. Our scheduling utility is free and open-source, and is available to anyone through a web applet.

How can we make AI collaborate well with people? Narrowly optimizing the performance of AI agents may be convenient, but it can cause frustration when people are then asked to work with these agents. In this paper, we show that people prefer AI agents that are considerate of their preferences, even when this comes at the cost of performance. We also find that certain human-centric design choices increase how much people like the agent without harming the performance of the human-AI team. Our results strongly suggest that leveraging both subjective and objective metrics is crucial when designing AI agents for human collaboration.

Nature Machine Intelligence

Steyvers, M., Tejeda, H., Kumar, A., Belem, C., Karny, S., Hu, X., Mayer, L. W., & Smyth, P.

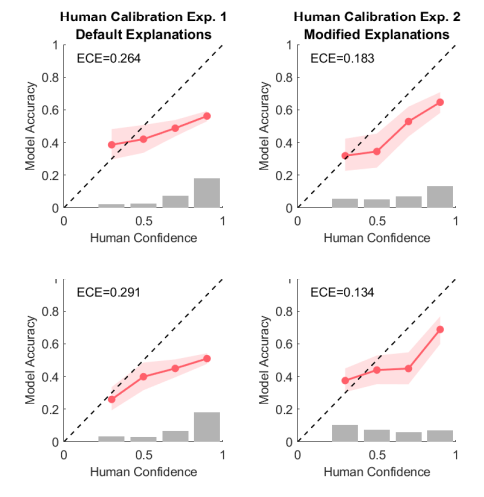

In this paper, we show that there is a misalignment between the confidence LLMs are perceived to have in their answers and what their internal confidence values reflect. In essence, LLMs routinely appear more confident than they should, even though their internal confidence values accurately reflect the uncertainty associated with the answer. We also show that prompting LLMs to include appropriate uncertainty language in their answers considerably narrows this gap between perceived and actual confidence.

Proceedings of the ACM Collective Intelligence Conference

Karny, S., Mayer, L. W., Ayoub, J., Song, M., Su, H., Tian, D., Moradi-Pari, E., & Steyvers, M.

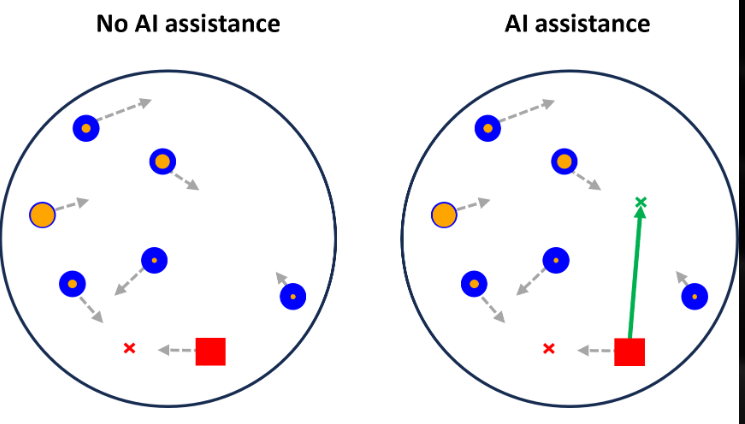

To answer this question, we developed a gamified task in which participants needed to intercept moving, point-valued targets before they escaped. During the game, an AI assistant suggests the optimal actions for maximizing the player's score. We manipulated when people received this assistance to see whether its presence harmed or boosted their learning during the task. Despite widespread media fears of skill degradation and hopes of AI-boosted learning, we find that in our task, AI assistance neither harms nor enhances learning. This finding highlights the possibility that there exists a subset of tasks for which AI assistance can be provided without risk. We hypothesize that this subset may include many tasks that do not require higher-level reasoning.